CMU Researchers, Argo AI Predict Future With Lidar Data

Carl Franzen | Argo AI | Friday, June 24, 2022

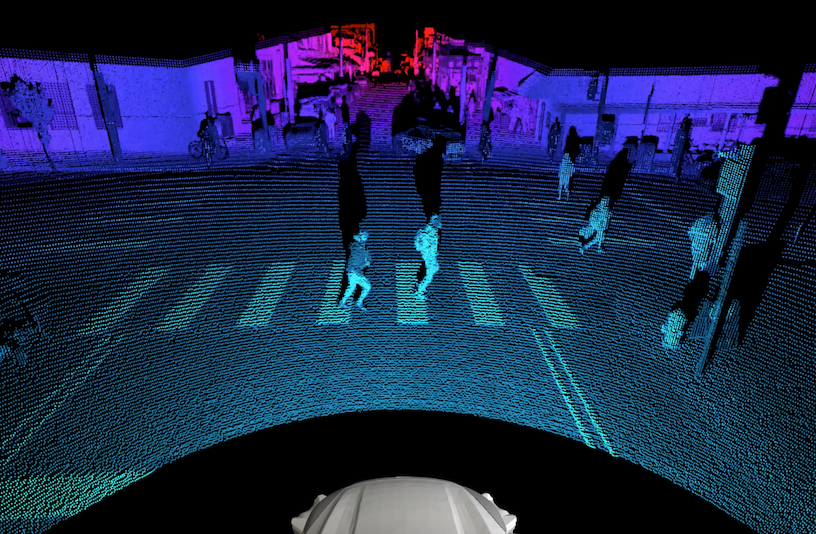

Researchers at Carnegie Mellon University's Argo AI Center for Autonomous Vehicle Research developed a system that uses lidar data to visualize not just where other moving objects are on the road now, but also where they are likely to be in a few seconds. This method enables autonomous vehicles (AVs) to better plan for the motions of cars, pedestrians and other moving objects around them.

Using a moving object's current position, velocity and trajectory, their method, FutureDet, generates several possible future paths for the object in the lidar data, ranks them by how confident it is that the object will follow each one, and presents all of these paths to the AV as if they were occurring in real time. The AV can then plan how to respond to each path.
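A toy version of "generate several candidate paths, then rank them by confidence" makes the idea more concrete. This is a hypothetical sketch, not FutureDet's method: FutureDet learns its candidate paths with a neural network, whereas here each candidate comes from rolling the object forward at constant speed under an assumed turn rate, and the confidence scores are simply supplied by hand. The names `Candidate`, `extrapolate` and `rank_paths`, the turn rates and the scores are all illustrative assumptions.

```python
import math
from dataclasses import dataclass


@dataclass
class Candidate:
    """One hypothetical future path: (x, y) points plus a confidence score."""
    path: list
    confidence: float


def extrapolate(x, y, speed, heading, turn_rate, horizon=3.0, dt=0.5):
    """Roll an object forward at constant speed and constant turn rate (rad/s).

    This simple kinematic model is an assumed stand-in for a learned forecaster.
    """
    points = []
    for _ in range(round(horizon / dt)):
        heading += turn_rate * dt
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        points.append((x, y))
    return points


def rank_paths(x, y, speed, heading, hypotheses, top_k=3):
    """Build one candidate per (turn_rate, confidence) pair; keep the top_k by confidence."""
    candidates = [
        Candidate(extrapolate(x, y, speed, heading, rate), conf)
        for rate, conf in hypotheses
    ]
    candidates.sort(key=lambda c: c.confidence, reverse=True)
    return candidates[:top_k]


# A car at the origin heading along +x at 10 m/s, with four made-up hypotheses:
# keep straight, gentle left, gentle right, sharp left.
hypotheses = [(0.0, 0.6), (0.2, 0.2), (-0.2, 0.15), (0.5, 0.05)]
for c in rank_paths(0.0, 0.0, 10.0, 0.0, hypotheses):
    end_x, end_y = c.path[-1]
    print(f"confidence={c.confidence:.2f} endpoint=({end_x:.1f}, {end_y:.1f})")
```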

"This method basically takes in lidar measurements and spits out the possible future locations of objects," said Deva Ramanan, a professor in the School of Computer Science's Robotics Institute, head of the Argo AI Center, an Argo principal scientist and the supervising researcher on the paper.

FutureDet differs from previous techniques for handling lidar data, which take a more modular approach. In those methods, lidar data passes through several distinct software modules before it can influence what driving actions an AV will take. For example, lidar data comes into an AV's computer systems and passes through modules for detection, motion tracking and forecasting. Each module combines the lidar data with other types of data, and the self-driving system ultimately uses this combination to chart its course.
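The modular structure described above can be sketched as three separate stages chained together. This is a deliberately simplified, hypothetical illustration, not Argo AI's or any production stack: "detection" here just reads pre-computed object centers, "tracking" is greedy nearest-neighbour association between two frames, and "forecasting" is constant-velocity extrapolation. The function names, the 5 m matching radius and the 0.1 s frame spacing are assumptions. The point is structural: each stage consumes only the previous stage's output, so an early error such as a missed detection or a wrong association carries through to the forecast.

```python
import math


def detect(frame):
    """Detection stage (stand-in): return object centers for one lidar frame.

    A real detector would infer these from the point cloud; here each frame
    is assumed to already be a list of (x, y) centers.
    """
    return list(frame)


def track(prev_dets, curr_dets, max_dist=5.0):
    """Tracking stage: greedily pair each current detection with the nearest
    unused previous detection within max_dist meters."""
    pairs, used = [], set()
    for cx, cy in curr_dets:
        best, best_d = None, max_dist
        for i, (px, py) in enumerate(prev_dets):
            d = math.hypot(cx - px, cy - py)
            if i not in used and d <= best_d:
                best, best_d = i, d
        if best is not None:
            used.add(best)
            pairs.append((prev_dets[best], (cx, cy)))
    return pairs


def forecast(pairs, dt=0.1, horizon=1.0):
    """Forecasting stage: extrapolate each tracked object at constant velocity."""
    out = []
    for (px, py), (cx, cy) in pairs:
        vx, vy = (cx - px) / dt, (cy - py) / dt
        out.append((cx + vx * horizon, cy + vy * horizon))
    return out


def modular_pipeline(prev_frame, curr_frame):
    """Chain the stages: each one sees only the previous stage's output."""
    return forecast(track(detect(prev_frame), detect(curr_frame)))


# Two frames 0.1 s apart: one object moving along +x at 10 m/s, one parked.
print(modular_pipeline([(0.0, 0.0), (20.0, 5.0)], [(1.0, 0.0), (20.0, 5.0)]))
# [(11.0, 0.0), (20.0, 5.0)]
```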

By contrast, FutureDet can be seen as a step toward end-to-end processing, in which lidar data comes into an AV's computers at one end and a driving instruction comes out the other. However, such black-box, end-to-end approaches can be difficult to interpret and analyze. FutureDet stops short of that: it processes the lidar data to understand the world and how it will evolve, and leaves the decision of where the AV should and shouldn't drive to a downstream motion planner.

FutureDet's other significant innovation is that it predicts multiple future paths for moving objects. To do so, the system repurposes the spatial heatmaps a lidar detector uses to reason about the present locations of multiple moving objects, and uses them to estimate where those objects will be in the future.
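Center-based lidar detectors commonly output a bird's-eye-view heatmap whose peaks mark object centers. The sketch below shows one way to decode such peaks from a stack of heatmaps, one per timestep, where timestep 0 is the present and later timesteps are the future. It is a hypothetical illustration: the nested-list layout `heatmaps[t][row][col]`, the 3x3 local-maximum test and the 0.5 score threshold are assumptions, not details taken from the FutureDet paper. In this simplified picture, two separate peaks in a future heatmap correspond to two candidate future locations for the same object.

```python
def decode_peaks(heatmaps, threshold=0.5):
    """Return (t, row, col, score) for each cell that is at least `threshold`
    and is the maximum of its 3x3 neighbourhood in heatmap t.

    heatmaps: nested lists indexed as heatmaps[t][row][col] (assumed layout).
    Ties between neighbouring cells would yield more than one peak.
    """
    peaks = []
    for t, grid in enumerate(heatmaps):
        rows, cols = len(grid), len(grid[0])
        for r in range(rows):
            for c in range(cols):
                score = grid[r][c]
                if score < threshold:
                    continue
                # The neighbourhood includes the cell itself and is clipped at the grid edges.
                neighbours = [
                    grid[rr][cc]
                    for rr in range(max(0, r - 1), min(rows, r + 2))
                    for cc in range(max(0, c - 1), min(cols, c + 2))
                ]
                if score >= max(neighbours):
                    peaks.append((t, r, c, score))
    return peaks


# Made-up heatmaps: one object now, and two plausible locations one step later.
present = [
    [0.0, 0.1, 0.0, 0.0, 0.0],
    [0.1, 0.9, 0.2, 0.0, 0.0],
    [0.0, 0.1, 0.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 0.0, 0.0],
]
future = [
    [0.0, 0.0, 0.0, 0.1, 0.0],
    [0.0, 0.0, 0.1, 0.7, 0.1],
    [0.0, 0.0, 0.0, 0.1, 0.0],
    [0.0, 0.55, 0.1, 0.0, 0.0],
]
print(decode_peaks([present, future]))
# [(0, 1, 1, 0.9), (1, 1, 3, 0.7), (1, 3, 1, 0.55)]
```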

This feature allows the lidar detection system to react to future events on its own, without requiring separate modules. The lidar system is already built to detect where objects are around it; FutureDet has it produce the same kind of object data, except that this data tells the system where objects are likely to be in the future.

"Essentially, we take a moving object's previous one second of lidar data and give it to a neural network, and ask it where the object will be next, and it gives us three or four possible paths," Ramanan said.

A neural network is a type of artificial intelligence program that learns a task by analyzing numerous examples. In this case, those examples include hundreds of thousands of four-second clips of lidar recordings from AVs driving down the street, in which the objects around the AV have been prelabeled by human analysts as other vehicles, cyclists, pedestrians and more. The neural network learns to recognize and categorize these distinct objects, and can then recognize new instances of them on the roads or in a simulation.

FutureDet is still an early-stage method and will need further study in computer simulations before it can be tested on any real-world AVs. But it has already been tested on open-source lidar data from the nuScenes dataset, released by the AV company Motional and freely available online, and will soon be tested on lidar data from Argo's open-source Argoverse dataset. In these initial tests, FutureDet was 4% more accurate than previous methods at forecasting the future paths of objects that weren't moving in straight lines.
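The reported gain is specifically for objects that weren't moving in straight lines. One simple way to separate such objects for evaluation, sketched here as an assumption rather than the paper's exact protocol, is to extrapolate each object's last observed velocity and call its trajectory non-linear if the true final position lands more than a threshold distance from that constant-velocity guess. The function name `is_nonlinear`, the 2 m threshold and the sample trajectories are hypothetical.

```python
import math


def is_nonlinear(track, dt, horizon_steps, threshold=2.0):
    """Classify a ground-truth trajectory as non-linear (hypothetical criterion).

    track: (x, y) positions sampled every `dt` seconds; the last
    `horizon_steps` entries are the future and the rest are the observed past
    (at least two past positions are needed to estimate a velocity).
    """
    past = track[:-horizon_steps]
    (x0, y0), (x1, y1) = past[-2], past[-1]
    vx, vy = (x1 - x0) / dt, (y1 - y0) / dt
    t = horizon_steps * dt
    predicted = (x1 + vx * t, y1 + vy * t)  # constant-velocity guess
    actual = track[-1]
    return math.dist(predicted, actual) > threshold


straight = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (3.0, 0.0), (4.0, 0.0)]
turning = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0), (2.5, 2.0), (2.5, 4.0)]
trajectories = {"straight": straight, "turning": turning}
nonlinear = [name for name, tr in trajectories.items()
             if is_nonlinear(tr, dt=0.5, horizon_steps=2)]
print(nonlinear)  # ['turning']
```

Accuracy on the non-linear subset would then be computed only over the objects this filter selects.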

"That is definitely the goal of all this research, to develop new methods that we can ultimately put on the AVs that improve accuracy, reduce the amount of code we're running, and give us even more efficiencies in processing power and energy," Ramanan said.

FutureDet is described in a paper presented this week at the 2022 Conference on Computer Vision and Pattern Recognition (CVPR) in New Orleans. Other co-authors include Argo Principal Scientist and Technical University of Munich Professor Laura Leal-Taixé, Technical University of Munich researcher Aljoša Ošep, CMU researcher Neehar Peri, and Jonathon Luiten from RWTH Aachen University.

Read more about research at CVPR by CMU researchers and Argo engineers on the Ground Truth Autonomy website.

Carl Franzen is a Ground Truth contributor and senior digital media specialist at Argo AI.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu