Research Highlight: Sim-to-Real Transfer for Tactile-Based Robot Grasping

Kayla Papakie | Wednesday, November 30, 2022

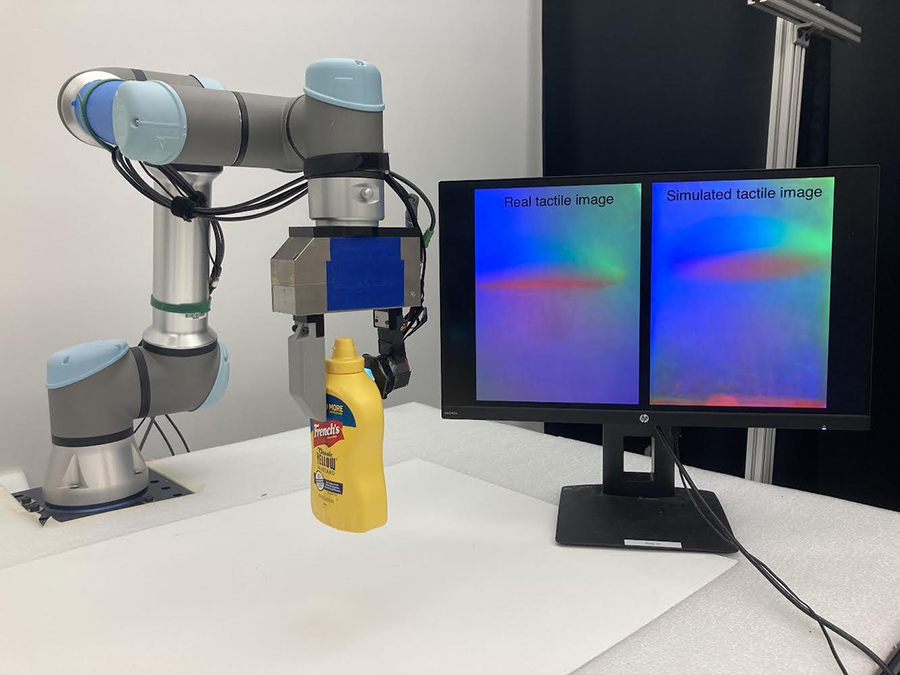

Robot simulation, a tool that enables robots to learn real-life tasks in an entirely computer-generated environment, can lower costs and save time. But most existing simulation frameworks for robots that grasp items lack efficient and realistic tactile models, limiting a robot's ability to apply what it learns in simulation to the real world. Research from Carnegie Mellon University's Robotics Institute (RI) aims to eliminate this "sim-to-real" transfer gap by developing a realistic simulator that models the physics of contact to integrate robot dynamics with vision-based tactile sensors.

The research team includes Wenzhen Yuan, an assistant professor in RI and director of the RoboTouch Lab; RI Ph.D. students Zilin Si and Arpit Agarwal; Zirui Zhu, an undergraduate student from Tsinghua University; and Stuart Anderson, a Meta research engineer who earned his Ph.D. in robotics in 2013. The team presented its paper, "Grasp Stability Prediction With Sim-to-Real Transfer From Tactile Sensing," in October at the 2022 International Conference on Intelligent Robots and Systems in Kyoto, Japan.

In the paper, the researchers introduce an integrated framework for training robots to grasp objects in a simulation environment and then transferring that knowledge directly to real-world applications. Their simulation framework integrates physics, contact and tactile data, and the resulting model predicted grasp stability for tactile robots handling objects of various shapes and physical properties with 90.7% accuracy. This work could be used in the future to create simulations for more complicated robot manipulation tasks.

For more information, visit the project's website.

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu