W-Net Uses AI To Better Understand Ultrasound Imagery

Convolutional Neural Network Aims To Assist Doctors With Diagnoses

Megan Harris | Thursday, June 30, 2022

Precision is paramount in most stages of scientific research, but John Galeotti and colleagues at Carnegie Mellon University recently homed in on the opposite: fuzziness.

Galeotti, a systems scientist with CMU's Robotics Institute and an adjunct assistant professor in biomedical engineering, has developed artificial intelligence to assist doctors making diagnoses from ultrasound imagery.

"Ultrasound is the safest, cheapest and fastest medical imaging modality, but it's also arguably the worst in terms of image clarity," Galeotti said. "The research here is ultimately about teaching AI to process details in an ultrasound, including subtle details and extra information that humans typically don't see. Just by having that extra information, the AI can form better conclusions."

Even for highly trained humans, confidently telling healthy tissue from distressed tissue in an ultrasound can be tough. Fuzziness could indicate disease or pathology. Beneath the deepest layer of skin, brightness, breaks and indents in various lines may suggest a need for further testing. Until now, those interpretations were based on subjective human analysis.

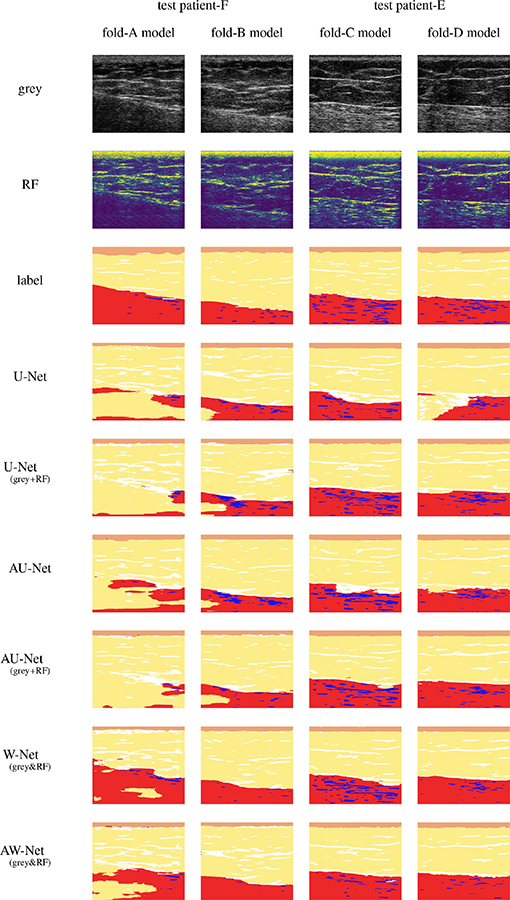

Through interdisciplinary research, collaborations and teaching, Galeotti has been working for years to improve patient outcomes by focusing on the tools of science and medicine. His most recent work introduces W-Net, a novel convolutional neural network (CNN) framework that uses raw ultrasound waveforms in addition to the static ultrasound image to semantically segment and label tissues for anatomical, pathological or other diagnostic purposes. Through machine learning, W-Net aims to standardize diagnoses made through ultrasounds. The journal Medical Image Analysis published the initial results of this research in February.

"To our knowledge, no one has ever seriously tried to ascribe meaning to every pixel of an ultrasound, throughout an entire image, video or scan. AI was pretty bad at doing this before, but thanks to W-Net it has the potential to be much better," Galeotti said.

Initial tests in breast tumor detection show W-Net consistently outperformed established diagnostic AI frameworks. Since the COVID-19 pandemic started, much of the team's work has focused on pulmonary applications.

"As a clinician, I used to look at an ultrasound, and it was completely unintelligible," said Dr. Ricardo Rodriguez, a Baltimore-based plastic surgeon whose initial inquiries led to the creation of W-Net. "It was like a snowstorm. I see something; it's obviously there. But our brains aren't equipped to process it."

Gautam Gare, a Ph.D. candidate working with Galeotti, got an early crash course in radiography — learning from medical professionals how to label components of lung scans and recognize elements that may be cause for concern. He processed thousands of individual scans, each bringing the AI system closer to performing the same tasks on its own.

"It was a big learning curve," Gare said. "When I started, I didn't even know what an ultrasound image looked like. Now I understand it better, and the potential for this research is still really exciting. No one is exploring the raw data exactly the way we are."

In recent months, much of that labeling work has moved to a team of pulmonary specialists at Louisiana State University, under the guidance of Professor of Medicine and Physiology Dr. Ben deBoisblanc.

With Dr. deBoisblanc's group of about a dozen LSU clinicians sharing and labeling lung scans, Galeotti's team has been able to refine the process. Thursday meet-ups give them a chance to adjust and, in turn, improve the technology.

As development progresses, deBoisblanc has one eye on the future — and the market potential.

"Twenty years ago, you had to be a radiologist to understand this stuff. Now I'm pulling a little unit around from patient to patient on my morning rounds," deBoisblanc said. "Imagine a battlefield or an ambulance en route to a hospital, where someone with little to no training could point a device at an injured or incapacitated person and know immediately what might be wrong. It's the perfect time to let AI assist."

Aaron Aupperlee | 412-268-9068 | aaupperlee@cmu.edu